By Shawn Hayward

The eyes are nothing without the brain. While the eyes capture the photons reflecting off our surrounding environment, it’s the brain’s job to turn that information into something meaningful, something that helps us survive and prosper.

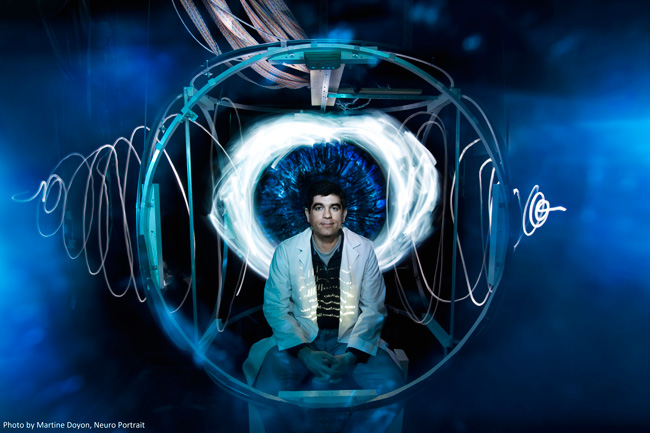

At the lab of Christopher Pack of the Montreal Neurological Institute and Hospital, the focus is on visual perception, specifically on how neurons communicate with one another to decipher what the eyes are seeing. Working in the visual cortex, the “Pack Lab” uses microelectrode recordings to listen to individual neurons as they talk to their neighbours.

When you consider that a neuron is about 20 microns (0.02 millimetres) in diameter, and that there are roughly 100 billion neurons in the human brain, you begin to understand what a feat it is to listen to a single neuron.

Neurons talk to each other in “code”, and deciphering this code is a big part of what the Pack Lab does. By understanding the code, the researchers can work out how what we see shapes what happens in our brains.

It’s important because vision is not as straightforward as it seems. For example, common sense would tell you that the bigger an object gets, the better we should be able to determine its direction of motion. That is correct, but only up to a certain point. About 15 years ago, psychophysical research by Dr. Duje Tadin and colleagues revealed that once an object reaches about eight degrees of visual angle, our ability to perceive its direction of motion actually drops as the object gets bigger.

Researchers found that this effect held in healthy brains, but in people with certain psychological conditions, the elderly, and those with low IQ, the effect was weaker or non-existent. This means that people generally considered to have brain impairments could detect the direction of large moving objects better than the rest of us.

“All these people who are ostensibly impaired somehow, are actually better at this task than these people who are in the prime of their life,” explains Pack.

Following up on this research, Pack’s lab tested monkeys on their ability to see moving objects, because a healthy monkey’s responses to visual stimuli are similar to those of a human. They also recorded from single neurons in the middle temporal (MT) area of the visual cortex, which is thought to be the brain centre responsible for perceiving moving objects. The researchers found that MT neurons were firing normally in response to the large stimuli, yet, as predicted, the monkeys had increasing difficulty detecting the movement of larger objects. In fact, some individual neurons seemed to encode the stimuli better than the monkeys did.

What the Pack Lab researchers found was that as stimulus size increases, so too does the neural “noise” produced. Noise is the part of a neural signal that does not directly reflect what is actually being seen. It could be compared to static on a TV screen, distorting the actual broadcast. Their work was published in the journal eLife on May 26, 2016.

The Pack Lab found that when the stimulus was large, many different MT neurons shared the same noisy fluctuations. This kind of shared noise is very detrimental to the neural code.

“What really hurts the ability to encode a stimulus is noise, and especially noise that is correlated across different neurons,” says Pack.
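Why correlated noise is so damaging can be seen with a little statistics. If the brain could simply average many neurons, independent noise would cancel out, but noise shared across neurons would not. The sketch below is a minimal illustration, not the analysis used in the eLife paper: it assumes an idealized, hypothetical population in which every neuron's noise has variance σ² and every pair of neurons has the same correlation ρ. Under that assumption the variance of the population average is σ²(ρ + (1 − ρ)/n). The function names and parameters are illustrative only.

```python
import math
import random
import statistics


def pooled_noise_variance(n_neurons: int, rho: float, sigma: float = 1.0) -> float:
    """Analytic variance of the average of n equally correlated noise terms.

    Hypothetical model: each neuron's noise has variance sigma**2 and every
    pair of neurons has the same correlation rho.
    """
    return sigma**2 * (rho + (1.0 - rho) / n_neurons)


def simulate_pooled_noise(n_neurons: int, rho: float, trials: int = 5000,
                          sigma: float = 1.0, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity under the same model."""
    rng = random.Random(seed)
    averages = []
    for _ in range(trials):
        shared = rng.gauss(0.0, sigma)  # fluctuation common to every neuron
        total = 0.0
        for _ in range(n_neurons):
            private = rng.gauss(0.0, sigma)  # fluctuation unique to this neuron
            # Mixing gives each neuron variance sigma**2 and pairwise correlation rho.
            total += math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * private
        averages.append(total / n_neurons)
    return statistics.pvariance(averages)


if __name__ == "__main__":
    for rho in (0.0, 0.2):
        for n in (1, 10, 100):
            print(f"rho={rho:.1f} n={n:3d} "
                  f"analytic={pooled_noise_variance(n, rho):.3f} "
                  f"simulated={simulate_pooled_noise(n, rho):.3f}")
```

With ρ = 0, averaging 100 neurons cuts the noise variance to one hundredth of a single neuron's. With ρ = 0.2, the variance can never fall below 0.2σ², no matter how many neurons are pooled, which is the sense in which correlated noise limits what a population can encode.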

By studying the mathematical relationship between the noise and the monkeys’ perception, the researchers found that the visual cortex attempts to suppress this noise, and in the process it often shuts off its responses to large stimuli entirely.

This suppression is more effective in a healthy human brain, where it normally helps the brain process visual stimuli by filtering out what is unnecessary. Weaker suppression may explain why people with neurological impairments see large moving objects better. Ironically, in this case the filtering works against the observer.

Strangely enough, understanding this process could help researchers find ways to compensate for brain impairments and improve vision in the people who have them.

“If the suppression of this noise is the underlying mechanism for our failures in perceiving motion, then we would like to manipulate this suppression with new techniques in the future,” says Dave Liu, a Pack Lab researcher and the paper’s lead author. “This could provide a way of understanding what goes wrong in various diseases, such as schizophrenia and autism.”

June 22, 2016